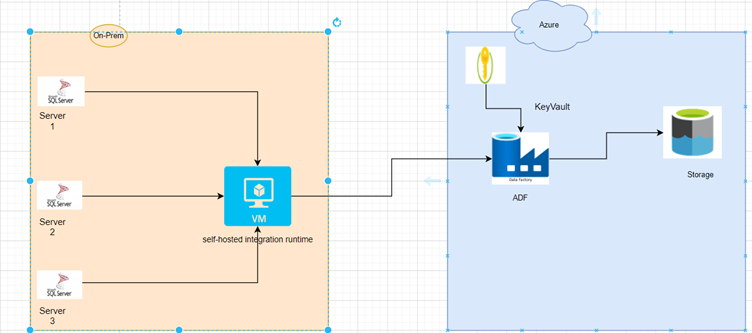

Data ingestion from different on-premises SQL systems to Azure storage involves securely transferring and storing data from various on-premises SQL databases into Azure data storage solutions like Azure Data Lake Storage, Azure Blob Storage, or Azure SQL Data Warehouse. This data movement is essential for organizations looking to centralize, analyze, and leverage their data within the Azure cloud environment.

Business Scenario

The demand for swift, informed decision-making is paramount in the contemporary business landscape. Organizations seek tools capable of swiftly generating insightful reports and dashboards by consolidating data from diverse, critical aspects of their operations.

Envision a scenario where data from multiple pivotal systems seamlessly converges into a readily accessible hub. Enter Azure’s robust Data Integration service—Azure Data Factory. This service excels at aggregating data from disparate systems, enabling the creation of a comprehensive data and analytics platform for businesses. Frequently, we deploy this solution to fulfill our customers’ data and analytics requirements, providing them with a powerful toolset for informed decision-making.

Business Challenges

Below are some challenges that may be faced during the data ingestion process to Azure.

- If SQL servers are outdated and change, the data capture mechanism doesn’t support incremental loads. Additional efforts are needed to implement gradual data change functionality, like creating control tables.

- The data format will have some challenges if data is stored in storage accounts instead of databases on Azure. The parquet format helps fix this problem.

Solution Strategy

- Identify the source entities\views\tables from the database system. Also, identify the column that needs to be used for incremental changes (mostly date column preferred in table\view).

- Install and configure the self-hosted integration run time on an on-premises server with access to SQL servers.

- Create a Key Vault to store credentials. These credentials are useful during link services creation in Azure Data Factory.

- Create a source file and add all the source system tables into the tab for each source. Future table additions\deletions\updates will happen through this file only.

- Create a similar type of file for incremental loads. This file will include a column name that refers to incremental changes.

- Create source and destination link services.

- Create source and destination datasets for associated tables\views in the database.

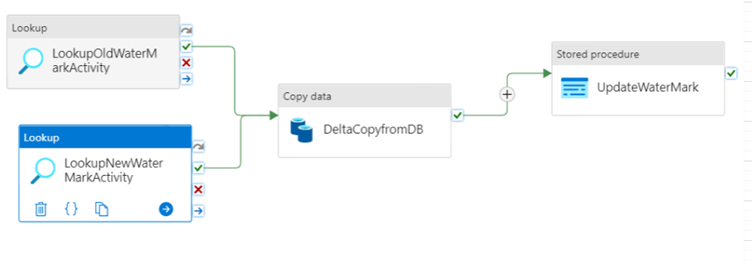

- Create a watermark table and store procedure in a Serverless Azure SQL table. It is required for incremental loads.

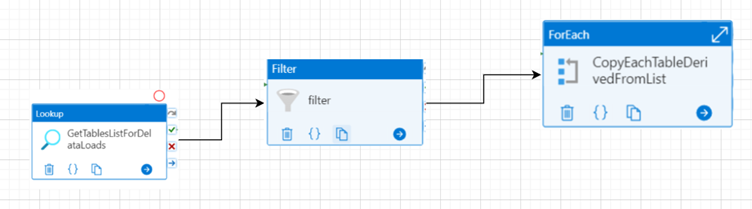

- Create an entire load pipeline. The pipeline uses previously created source and destination link services and datasets. It also uses lookup and filter activity only to collect the data from mentioned tables in the source file.

- Follow similar instructions for the incremental load pipeline with additional steps to get the data difference from the previous copy to the current one using watermark column values.

- Schedule the pipelines and add a monitor to notify upon failures.

- Validate data by counting rows and sample row data on both sides.

- Validate watermark table updates upon incremental load pipeline execution.

Fig 1: Full Load Sample Pipeline Structure

Fig 2: Incremental Loads Sample Pipeline Structure

Fig 3: Look up

Outcome & Benefits

- Design the entire solution with parameterization. It can be replicated in multiple projects to reduce repetitive efforts.

- ADF supports automated and scheduled data ingestion.

- A robust system for monitoring and logging errors, facilitating seamless troubleshooting.

- ADF supports 100+ connectors as of today.

Conclusion

Are you ready to transform your data management and unlock valuable insights within your organization? Take the first step towards a more data-driven future by exploring our data ingestion solutions today. Contact our data and analytics experts to discuss your needs and embark on a journey towards enhanced data utilization, improved business intelligence, and better decision-making.

Mastanvali Shaik

Solution Architect – Managed Services

Recent Blogs