In the demanding world of biopharmaceuticals, where every discovery, every research finding, and every collaborative decision can impact patient lives, efficiency and innovation are paramount. Companies in this sector are constantly striving to accelerate their scientific endeavors, streamline complex research and development cycles, and ensure seamless communication across geographically dispersed teams. However, many find their progress hampered by outdated collaboration tools, fragmented data repositories, and a lack of robust governance.

This challenge is not unique to a single organization; it’s a common pain point across the biopharmaceutical industry. As a Microsoft Solutions Partner, Cambay Solutions frequently encounters organizations grappling with content sprawl, limited information discoverability, and an overall suboptimal user experience that hinders productivity. This article delves into a real-world case where a leading biopharmaceutical company, committed to developing therapies for serious and rare diseases, embarked on a transformative journey to overcome these very obstacles, establishing a new benchmark for collaborative excellence.

The Imperative for Change: Addressing the Collaboration Conundrum

Our client, a prominent player in the biopharmaceutical industry, recognized that their existing collaboration infrastructure was no longer equipped to support their ambitious growth and innovation objectives. The challenges they faced are familiar to many organizations operating in highly regulated and data-intensive fields:

- Content Governance Deficiencies: A lack of centralized control over content creation, storage, and lifecycle management led to inconsistencies, version control issues, and difficulty in ensuring compliance with stringent industry regulations. In biopharma, proper governance is not just about efficiency; it’s about maintaining data integrity and meeting regulatory mandates.

- Information Silos and “Site Sprawl”: Over time, numerous departmental data stores and ad-hoc collaboration sites had emerged. This fragmentation created isolated pockets of information, making it incredibly difficult for employees to locate essential documents, leading to duplicated effort and hindering the cross-functional insights critical for research and development.

- Limited Discoverability of Crucial Data: Even when content existed within the various systems, finding it was often a time-consuming and frustrating exercise. Inefficient search capabilities meant that valuable research data, clinical trial results, and strategic insights remained hidden, preventing teams from leveraging existing knowledge and accelerating new initiatives.

- Suboptimal User Experience: The disjointed nature of their collaboration tools resulted in a clunky, inconsistent, and often frustrating user experience. This not only affected employee morale and productivity but also created resistance to adopting new tools, undermining the very collaborative spirit the organization sought to foster.

- Scalability Concerns: With aggressive plans for future expansion, increasing data volumes, and a growing workforce, the existing infrastructure lacked the scalability and flexibility to support the company’s long-term vision. They needed a future-proof solution capable of evolving with their dynamic business needs.

Recognizing these critical challenges, the biopharmaceutical leader sought a comprehensive, scalable, and secure solution that would not only resolve immediate pain points but also establish a robust foundation for enhanced collaboration and productivity.

The Solution: “Collab 2.0” – A Microsoft Teams-Powered Transformation

To address these multifaceted challenges, Cambay Solutions, drawing upon its deep expertise as a Microsoft Solutions Partner, designed and implemented a comprehensive collaboration platform, internally referred to as “Collab 2.0.” This solution was strategically built upon the Microsoft Teams platform, leveraging its inherent capabilities for unified communication, in-app access to business applications, and tight integration with the broader Microsoft 365 ecosystem.

The technical architecture was meticulously crafted to deliver a highly functional, secure, and user-friendly environment:

- Microsoft Teams as the Central Collaboration Hub: At the core of “Collab 2.0” was Microsoft Teams, which provided a single, centralized platform for all communication, virtual meetings, real-time document co-authoring, and application access. Teams’ channel-based structure supported organized departmental and project-specific collaboration, effectively dismantling existing information silos. This facilitated more agile and responsive project management, critical in fast-paced research environments.

- SharePoint Online for Enterprise Content Management: We leveraged SharePoint Online as the robust backbone for all content management. SharePoint’s enterprise-grade features delivered secure document storage, comprehensive version control, granular access permissions, and advanced document management capabilities. This directly addressed the content governance and discoverability challenges, providing a structured, searchable, and compliant repository for all critical scientific and operational documents.

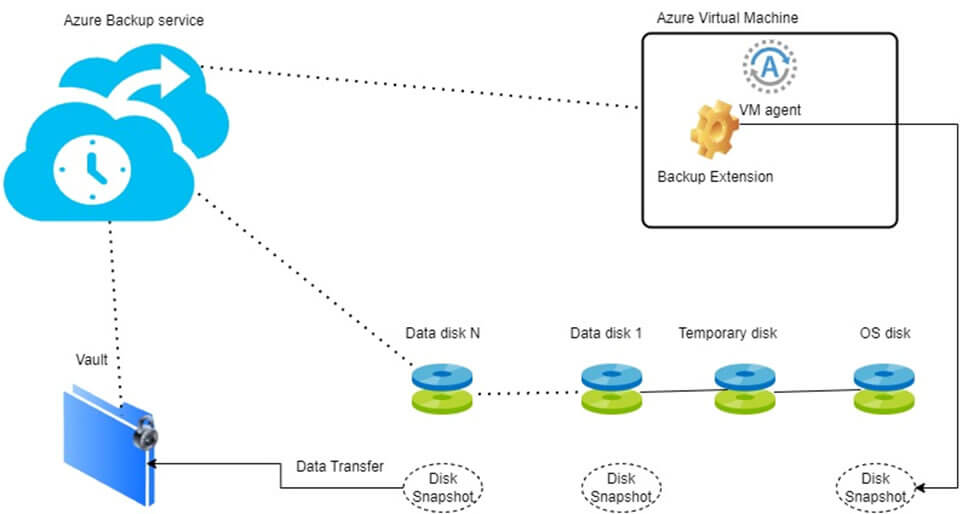

- Azure Functions for Automated Processes: To enhance efficiency and ensure data integrity, custom Azure Functions were developed. These serverless computing components automated various critical processes, including complex data migration tasks from legacy systems and routine backup operations. This automation minimized manual intervention, reduced the risk of human error, and ensured the reliable handling of sensitive biopharmaceutical data during the transition and ongoing operations.

- PowerApps for Tailored Business Applications: The agility of the Microsoft Power Platform was harnessed through PowerApps. Custom applications were developed to cater to specific business needs, such as a streamlined PTO (Paid Time Off) request management system. This demonstrated the flexibility of the platform in extending Teams’ functionality to automate and improve various internal workflows, enhancing overall operational efficiency and the user experience.

- PnP Search Webparts for Enhanced Content Discoverability: To overcome the challenge of limited information discoverability, the user interface within Teams was significantly enhanced using PnP Search Webparts. These powerful web parts provided a highly robust and intuitive search experience, enabling users to quickly and accurately locate the information they needed, irrespective of its location within the new environment. This significantly improved knowledge sharing and accelerated access to vital research and operational data.

Phased Execution: A Smooth Transition to Modern Collaboration

The implementation of “Collab 2.0” was executed through a carefully planned, phased approach, designed to minimize disruption to the client’s critical operations while ensuring maximum impact:

- Initial Health Check and Assessment: Cambay Solutions began with a comprehensive health check of the client’s existing IT infrastructure and collaboration landscape. This crucial first step involved a detailed assessment of their current content, identification of key pain points, and a deep understanding of specific departmental needs and workflows. This thorough analysis formed the bedrock for a truly tailored and effective solution design.

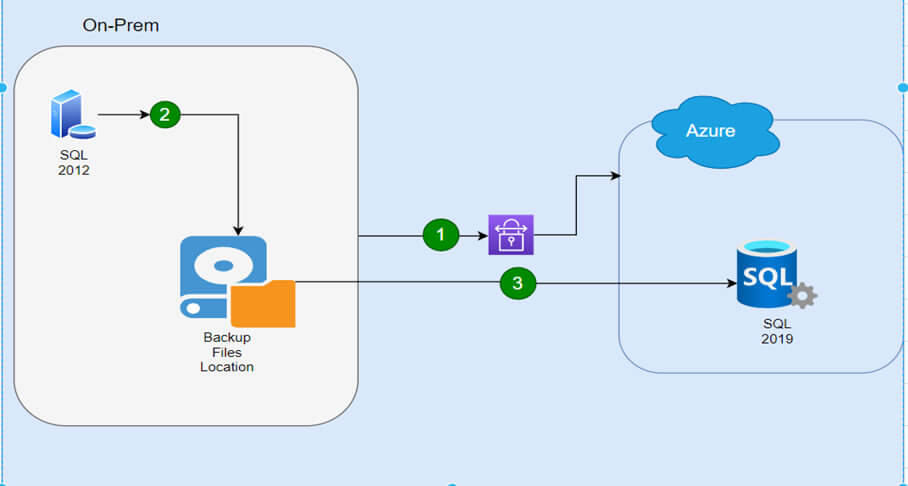

- Solution Development and Content Migration: Following the detailed design phase, Cambay Solutions proceeded with the development of the “Collab 2.0” solution within Microsoft Teams and SharePoint Online. Simultaneously, a meticulous, large-scale content migration began. This involved the secure transfer of a significant volume of documents, files, and other critical data from seven different legacy data stores to the new Microsoft Teams environment. This complex undertaking was carefully planned and executed to minimize operational disruption and preserve data integrity. The custom Azure Functions played a pivotal role in automating and streamlining critical aspects of this migration.

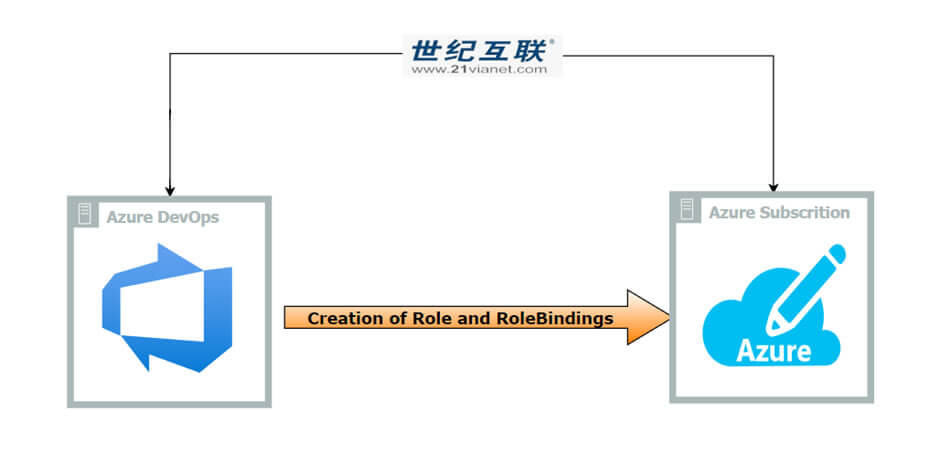

- Comprehensive Governance Implementation: Recognizing the paramount importance of a secure and compliant environment within the biopharmaceutical sector, a robust governance framework was established. This included defining clear policies for content creation, data retention, access control, and security within Microsoft Teams and SharePoint. This proactive approach ensured that the client’s sensitive intellectual property and regulated data remained protected, and that all relevant compliance requirements were met. This also laid the essential foundation for long-term sustainability and efficient management of the new platform.

Navigating Challenges: A Collaborative Path to Success

Large-scale digital transformations, particularly in complex industries like biopharma, often present unique challenges. Cambay Solutions, in close collaboration with the client’s internal teams, approached these with a proactive and problem-solving mindset:

- Integration Complexity: Integrating the new Microsoft 365 environment with the client’s existing legacy infrastructure posed significant technical complexities. This was effectively managed through detailed planning sessions, extensive technical collaboration between Cambay Solutions and the client’s IT stakeholders, and a phased integration strategy. APIs and custom connectors were leveraged to ensure seamless data flow and functionality between disparate systems.

- User Adoption: Ensuring widespread user adoption of new tools and processes is frequently the most critical aspect of any digital transformation. Cambay Solutions implemented a multi-faceted user adoption strategy, recognizing that technology’s value is realized only when embraced by its users. This strategy included:

  - Tailored Training Programs: Extensive training sessions were conducted for all seven departments, focusing on the core functionalities of Microsoft Teams, SharePoint Online, and the custom PowerApps. These sessions were designed to be highly practical, emphasizing real-world use cases relevant to each department’s daily activities.

  - Comprehensive Documentation and Support: User guides, FAQs, and quick reference materials were developed and made readily accessible within the new Teams environment, providing self-service support and reinforcing training.

  - Internal Champion Network: Key individuals from each department were identified and empowered to become “champions,” acting as internal advocates and providing peer-to-peer support, which significantly boosted overall adoption rates.

  - Open Communication and Feedback Loops: Transparent communication channels were established to gather user feedback, address concerns promptly, and iteratively refine the solution to better meet evolving user needs, fostering a sense of ownership among the user base.

- Scale of Content Migration: The sheer volume and diversity of content being migrated from seven distinct legacy data stores presented a substantial logistical and technical undertaking. This challenge was managed through:

  - Phased Migration Strategy: The migration was meticulously broken down into manageable phases, often executed department by department, to minimize risk, allow for iterative improvements, and ensure business continuity.

  - Automated Tools: The strategic use of Azure Functions for automated data transfer and validation significantly reduced manual effort, accelerated the migration timeline, and minimized the potential for errors.

  - Data Cleansing and Standardization: Prior to migration, a thorough data cleansing and standardization process was undertaken. This ensured that only relevant, accurate, and properly formatted data was transitioned to the new environment, improving the overall quality and utility of information within the new system.

  - Dedicated Migration Expertise: A specialized team from Cambay Solutions, with deep expertise in large-scale data migration, oversaw the entire process, ensuring smooth execution and proactive troubleshooting while safeguarding data integrity throughout.
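The validation and cleansing steps described above can be illustrated with a small sketch. The case study does not describe how the project’s Azure Functions performed these checks, so what follows is a hypothetical Python example, not the implementation used. It assumes each legacy store and the SharePoint target can be exported as a manifest mapping relative file paths to SHA-256 digests; the helper names (`name_problems`, `sha256_of`, `validate_migration`, `ValidationReport`) are illustrative. The name check reflects Microsoft’s published OneDrive and SharePoint restrictions (the characters `" * : < > ? / \ |`, leading or trailing spaces, names beginning with `~$`); confirm against Microsoft’s current list before relying on it.

```python
import hashlib
from dataclasses import dataclass, field

# Hypothetical pre- and post-migration checks; not the project's actual Azure Function.
# Characters SharePoint Online / OneDrive reject in file and folder names
# (per Microsoft's published restrictions; verify against the current list).
INVALID_CHARS = set('"*:<>?/\\|')


def name_problems(name: str) -> list[str]:
    """Return the reasons a file name would need cleansing before upload."""
    problems = []
    bad = sorted(INVALID_CHARS.intersection(name))
    if bad:
        problems.append("invalid characters: " + "".join(bad))
    if name != name.strip():
        problems.append("leading or trailing whitespace")
    if name.startswith("~$"):
        problems.append("temporary Office file")
    return problems


def sha256_of(data: bytes) -> str:
    """Hex SHA-256 digest used as a content fingerprint in the manifests."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class ValidationReport:
    missing: list[str] = field(default_factory=list)     # in source, absent from target
    mismatched: list[str] = field(default_factory=list)  # in both, but digests differ
    unexpected: list[str] = field(default_factory=list)  # in target only

    @property
    def ok(self) -> bool:
        return not (self.missing or self.mismatched or self.unexpected)


def validate_migration(source: dict[str, str], target: dict[str, str]) -> ValidationReport:
    """Compare path -> SHA-256 manifests captured before and after migration."""
    report = ValidationReport()
    for path, digest in source.items():
        if path not in target:
            report.missing.append(path)
        elif target[path] != digest:
            report.mismatched.append(path)
    report.unexpected = sorted(set(target) - set(source))
    return report


if __name__ == "__main__":
    source = {"QA/SOP-001.docx": sha256_of(b"v1"), "QA/SOP-002.pdf": sha256_of(b"v2")}
    target = {"QA/SOP-001.docx": sha256_of(b"v1"), "QA/SOP-002.pdf": sha256_of(b"truncated")}
    print(validate_migration(source, target).mismatched)  # ['QA/SOP-002.pdf']
    print(name_problems("Batch: 42?.xlsx"))  # ['invalid characters: :?']
```

Running the name check before each phase supports the cleansing step, and running the manifest comparison after each phase supports the validation step, so problems surface department by department rather than at the end. One caveat: SharePoint may rewrite document properties inside Office files on upload, so a hash mismatch on a `.docx` or `.xlsx` file is a signal to inspect rather than proof of corruption.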

Project Closure and Transformative Outcomes

The “Collab 2.0” project was successfully brought to completion, marked by a formal closure meeting where all deliverables were reviewed and officially signed off by key stakeholders from the biopharmaceutical company. This milestone represented not just the conclusion of a project, but the successful establishment of a modern, efficient, secure, and scalable collaboration platform that profoundly impacted the client’s operations.

The implementation yielded significant and measurable benefits across the organization:

- Elevated Collaboration and Communication: Microsoft Teams became the single, central hub for all internal communication, replacing fragmented tools and significantly reducing email clutter. Real-time chat, seamless video conferencing, and structured team channels fostered unprecedented levels of collaboration across diverse departments and global locations. This directly translated into accelerated decision-making and more efficient project execution, particularly crucial for research and development cycles.

- Robust Content Governance and Discoverability: With SharePoint Online serving as the structured backend, the biopharmaceutical leader achieved superior content governance, ensuring consistency, version control, and strict compliance with regulatory standards. The integration of PnP Search Webparts dramatically improved content discoverability, empowering employees to quickly access critical information, reducing wasted time, and significantly enhancing knowledge sharing.

- Streamlined Data Management: The successful migration of content from seven disparate legacy data stores to a centralized Microsoft Teams and SharePoint environment eliminated existing data silos and significantly streamlined data management processes. This resulted in reduced operational overhead, improved data integrity, and a single source of truth for critical information.

- Increased Productivity and Operational Efficiency: The strategic implementation of automation through Azure Functions, coupled with custom PowerApps for specific workflows, streamlined numerous business processes. This reduction in manual tasks allowed employees to dedicate more time to higher-value activities, leading to a demonstrable increase in overall productivity across the organization.

- Future-Ready Scalability: The “Collab 2.0” solution, built on the inherently flexible and scalable Microsoft Cloud, provides the biopharmaceutical client with a future-ready platform. This robust infrastructure can effortlessly accommodate their evolving needs, increasing user base, and growing data volumes, ensuring that their collaboration capabilities will continue to support their ambitious growth trajectory and scientific advancements.

- Empowered and Positive User Experience: By consolidating disparate tools into a single, intuitive interface within Microsoft Teams, the overall user experience was dramatically enhanced. This led to greater user satisfaction, increased engagement, and higher adoption rates of the new collaborative tools, fostering a more positive and productive work environment.

- Strengthened Compliance Posture: The meticulously implemented governance policies and the inherently secure environment built on Microsoft’s enterprise-grade cloud capabilities significantly bolstered the client’s compliance posture. For a biopharmaceutical company handling sensitive data and operating under strict regulatory frameworks, compliance is non-negotiable.

The Path Forward: Continuing the Journey of Innovation

The successful implementation of “Collab 2.0” has established a strong foundation for the biopharmaceutical leader’s ongoing digital transformation journey. Discussions are under way for a subsequent phase of the project, which will focus on further enhancements, the integration of additional features, and the exploration of new capabilities to support the client’s evolving needs. This phase is likely to include leveraging advanced analytics, further integrating AI and machine learning capabilities within Microsoft 365, and optimizing the platform for greater efficiency and scientific innovation.

Partnering for Progress: The Cambay Solutions Advantage

The “Collab 2.0” project exemplifies how Cambay Solutions, as a dedicated Microsoft Solutions Partner, delivers tangible and transformative value to its clients. Our expertise in leveraging the full power of Microsoft technologies – from the collaborative capabilities of Microsoft Teams and the robust content management of SharePoint Online to the automation power of Azure Functions and bespoke application development with PowerApps – enables us to design and implement tailored solutions that directly address complex business challenges.

Our commitment to our clients is rooted in:

- Deep Technical Expertise: Our team possesses profound knowledge of the Microsoft ecosystem, ensuring that solutions are designed for optimal performance, stringent security, and scalable growth.

- Strategic Planning and Meticulous Execution: We collaborate closely with our clients to understand their unique operational needs, establish clear project objectives, and execute projects through well-defined, phased approaches that minimize risk and maximize impact.

- Unwavering Focus on User Adoption: We understand that technology’s true value is unlocked through widespread user adoption. Our comprehensive user adoption strategies, encompassing targeted training and continuous support, ensure a smooth transition and a maximized return on investment for our clients.

- Prioritizing Governance and Security: Especially in highly regulated industries like biopharma, we place paramount importance on building secure, compliant, and well-governed environments.

- A Partnership for Sustained Success: We view our relationships with clients as long-term partnerships, providing ongoing support, proactive maintenance, and strategic planning for future enhancements to ensure sustained value and continuous innovation.

If your organization, regardless of its industry, is seeking to enhance collaboration, streamline complex operations, improve data governance, and unlock the full potential of your digital workspace, Cambay Solutions is your trusted Microsoft Solutions Partner. Like the biopharmaceutical leader in this case, you can achieve a truly transformative digital experience, empowering your teams, accelerating your strategic objectives, and maintaining a competitive edge in today’s dynamic business landscape. Contact us today to explore how we can tailor a Microsoft-powered solution to meet your unique challenges and drive your success.